[1]:

%run notebook_setup

If you have not already read it, you may want to start with the first tutorial: Getting started with The Joker.

Inferring calibration offsets between instruments

In addition to the default linear parameters (see Tutorial 1, or the documentation for JokerPrior.default()), The Joker allows adding linear parameters to account for possible calibration offsets between instruments. For example, there may be an absolute velocity offset between two spectrographs. Below, we demonstrate how to simultaneously infer and marginalize over a constant velocity offset between two simulated surveys of the same “star”.
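To see why a calibration offset counts as a linear parameter, consider the toy sketch below. It is not The Joker's internal code: it assumes a circular orbit with a known period, and every numerical value and variable name is made up for illustration. The offset simply adds one column to the design matrix, equal to one for survey 2 observations and zero for survey 1 observations:

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "true" values for this toy problem (not the tutorial data)
P = 50.0        # orbital period in days, assumed known here
K_true = 5.0    # velocity semi-amplitude, km/s
v0_true = 40.0  # systemic velocity, km/s
dv_true = 4.8   # constant offset of survey 2 relative to survey 1, km/s

t = np.sort(rng.uniform(0, 200, size=40))
survey2 = np.arange(t.size) % 2 == 1   # alternate observations between surveys
shape = np.cos(2 * np.pi * t / P)      # unit-amplitude circular-orbit RV curve

# Simulated velocities with 0.1 km/s Gaussian noise
rv = K_true * shape + v0_true + dv_true * survey2
rv = rv + rng.normal(0, 0.1, size=t.size)

# Design matrix: one column per linear parameter (K, v0, offset)
A = np.column_stack([shape, np.ones_like(t), survey2.astype(float)])
coeffs, *_ = np.linalg.lstsq(A, rv, rcond=None)
K_fit, v0_fit, dv_fit = coeffs

print(f"K = {K_fit:.2f}, v0 = {v0_fit:.2f}, offset = {dv_fit:.2f} km/s")
```

Because the offset enters the model linearly, it can be handled in the same way as the other linear parameters at fixed values of the nonlinear ones.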

First, some imports we will need later:

[2]:

import astropy.table as at

import astropy.units as u

import numpy as np

import corner

import pymc as pm

import thejoker.units as xu

import arviz as az

import thejoker as tj

%matplotlib inline

WARNING (pytensor.tensor.blas): Using NumPy C-API based implementation for BLAS functions.

[3]:

# set up a random number generator to ensure reproducibility

rnd = np.random.default_rng(seed=42)

The data for our two surveys are stored in two separate ECSV files included with the documentation. We will load a separate RVData instance for each data set and append these objects to a list of data sets:

[4]:

data = []

for filename in ["data-survey1.ecsv", "data-survey2.ecsv"]:

    tbl = at.QTable.read(filename)

    _data = tj.RVData.guess_from_table(tbl, t_ref=tbl.meta["t_ref"])

    data.append(_data)

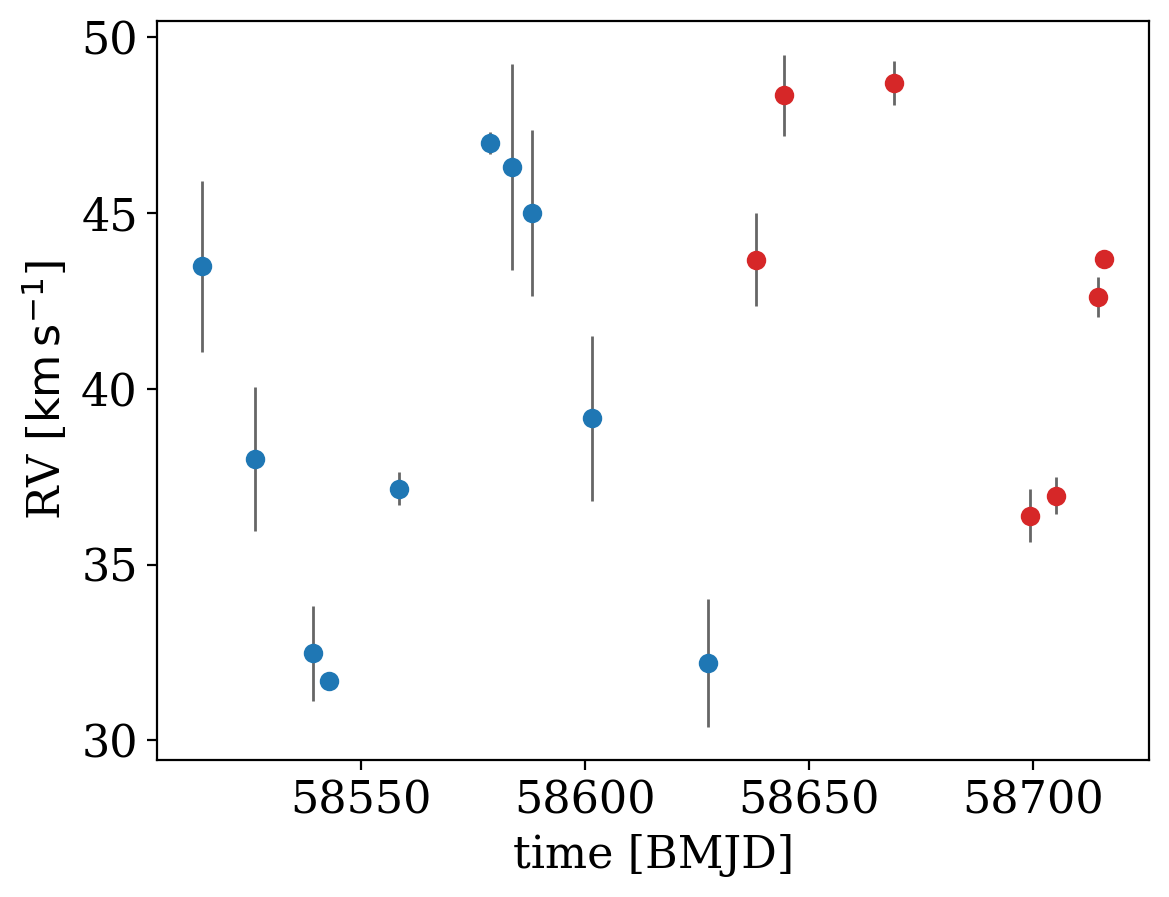

In the plot below, the two data sets are shown in different colors:

[5]:

for d, color in zip(data, ["tab:blue", "tab:red"]):

    _ = d.plot(color=color)

To tell The Joker to handle additional linear parameters that account for offsets in absolute velocity, we must define a new parameter for the offset between survey 1 and survey 2 and specify a prior on it. Here we assume a Gaussian prior on the offset, centered on 0, with a standard deviation of 10 km/s. We then pass this parameter to JokerPrior.default() through the v0_offsets argument (all other parameters here use the default prior):

[6]:

with pm.Model() as model:

    dv0_1 = xu.with_unit(pm.Normal("dv0_1", 0, 10), u.km / u.s)

    prior = tj.JokerPrior.default(

        P_min=2 * u.day,

        P_max=256 * u.day,

        sigma_K0=30 * u.km / u.s,

        sigma_v=100 * u.km / u.s,

        v0_offsets=[dv0_1],

    )
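To get a feel for how restrictive the Gaussian prior on the offset is, the short sketch below evaluates the prior probability that the offset falls within a few symmetric limits. It uses only the standard library; prob_within is a hypothetical helper written for this illustration and is not part of The Joker or PyMC:

```python
import math

prior_sigma = 10.0  # km/s, the standard deviation passed to pm.Normal above


def prob_within(limit, sigma=prior_sigma):
    """Prior probability that |offset| < limit for a zero-mean Gaussian."""
    return math.erf(limit / (sigma * math.sqrt(2)))


for limit in (5.0, 10.0, 20.0, 30.0):
    print(f"P(|dv0_1| < {limit:4.1f} km/s) = {prob_within(limit):.3f}")
```

If the expected calibration differences between instruments are much smaller or much larger than this scale, the standard deviation of the prior can be adjusted accordingly.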

The rest should look familiar: the code below is identical to that in previous tutorials, where we generate prior samples and then rejection sample with The Joker:

[7]:

prior_samples = prior.sample(size=1_000_000, rng=rnd)

[8]:

joker = tj.TheJoker(prior, rng=rnd)

joker_samples = joker.rejection_sample(data, prior_samples, max_posterior_samples=128)

joker_samples

[8]:

<JokerSamples [P, e, omega, M0, s, K, v0, dv0_1] (5 samples)>

Note that the new parameter, dv0_1, now appears in the returned samples above.
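As a reminder of what rejection sampling does with the prior samples, here is a minimal one-parameter sketch in which only the offset is unknown. It illustrates the general technique under made-up data and is not The Joker's implementation, which also marginalizes over the linear parameters:

```python
import numpy as np

rng = np.random.default_rng(123)

# Toy data: velocity differences between survey 2 and survey 1 (km/s),
# generated with a made-up true offset and measurement uncertainty
dv_true, sigma = 4.8, 1.5
resid = dv_true + rng.normal(0, sigma, size=10)

# 1. Draw samples from the prior, a Gaussian with mean 0 and width 10 km/s
prior_draws = rng.normal(0, 10, size=100_000)

# 2. Evaluate the Gaussian log-likelihood of the data for every prior sample
chi2 = np.sum((resid[None, :] - prior_draws[:, None]) ** 2, axis=1) / sigma**2
ln_like = -0.5 * chi2

# 3. Accept each sample with probability L / L_max
ln_u = np.log(rng.uniform(size=prior_draws.size))
accept = ln_u < ln_like - ln_like.max()
posterior_draws = prior_draws[accept]

print(f"{posterior_draws.size} of {prior_draws.size} prior samples accepted")
print(f"offset = {posterior_draws.mean():.2f} +/- {posterior_draws.std():.2f} km/s")
```

The accepted draws are samples from the posterior, which is why the number of surviving samples depends on how informative the data are relative to the prior.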

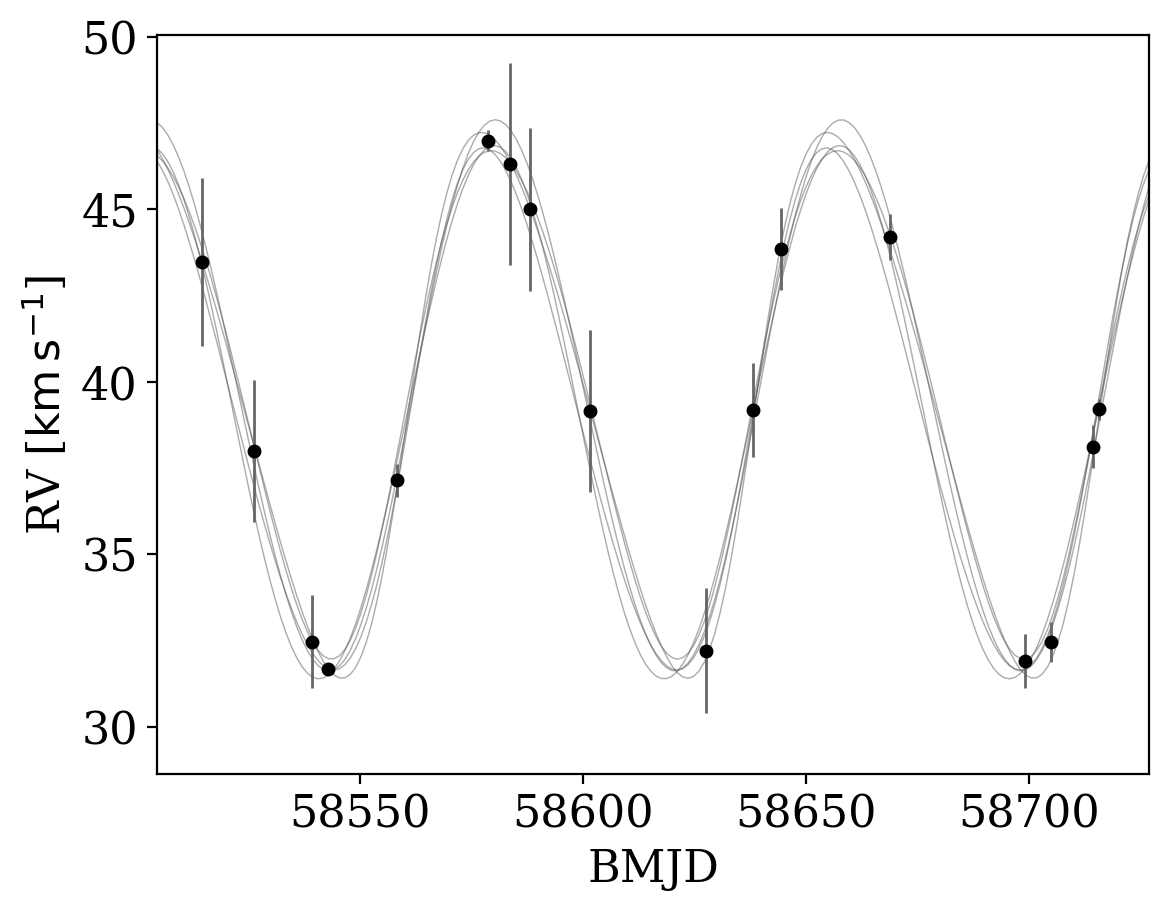

If we pass these samples to the plot_rv_curves function, the data from the other surveys are, by default, shifted by the mean value of the offset before plotting:

[9]:

_ = tj.plot_rv_curves(joker_samples, data=data)

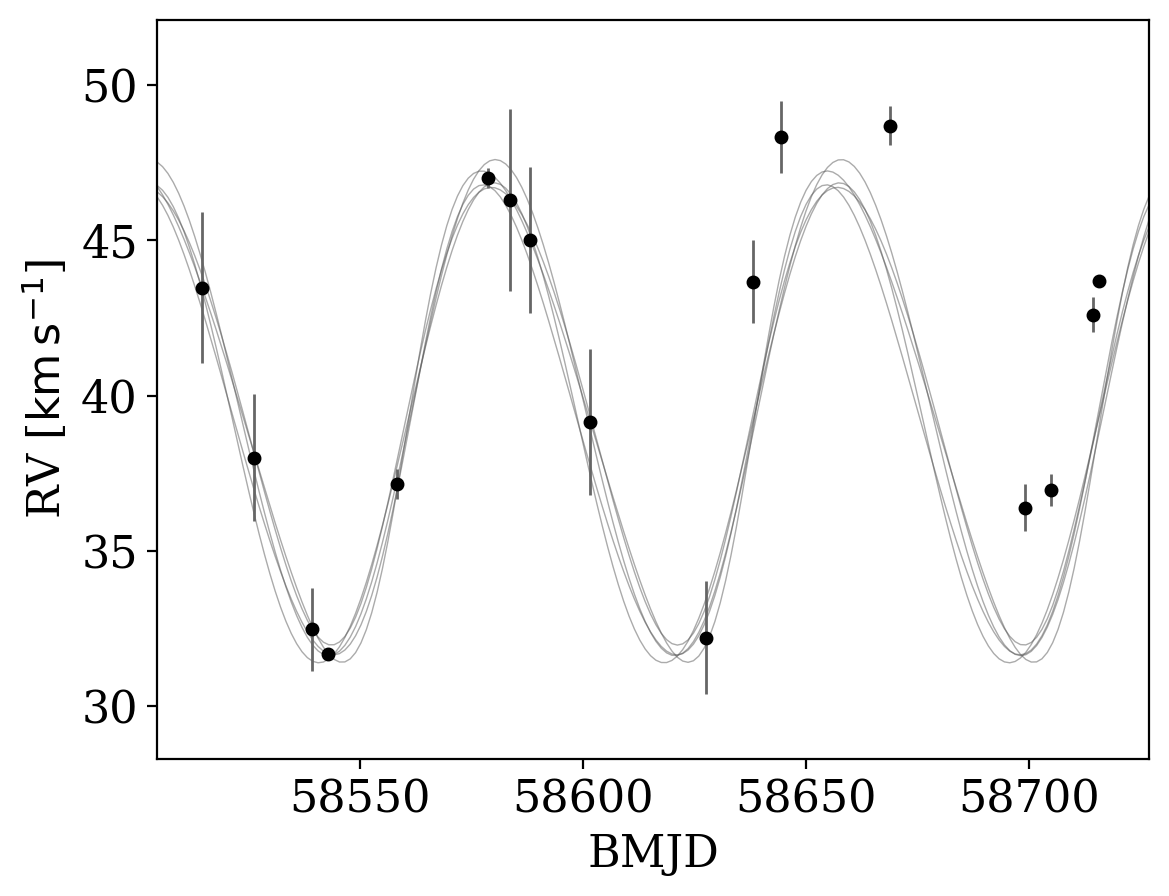

However, the above behavior can be disabled by setting apply_mean_v0_offset=False. Note that with this set, the inferred orbit will not generally pass through data that suffer from a measurable offset:

[10]:

_ = tj.plot_rv_curves(joker_samples, data=data, apply_mean_v0_offset=False)
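The shift applied before plotting can be pictured with the sketch below. All numbers are made up, and the sign convention (survey 2 velocities are modeled as orbit plus offset, so the mean offset is subtracted) is an assumption of this illustration rather than something taken from the plot_rv_curves source:

```python
import numpy as np

rng = np.random.default_rng(1)

# Made-up posterior samples of the offset and raw survey 2 velocities (km/s)
dv_samples = rng.normal(4.3, 0.5, size=128)
rv_survey2 = np.array([44.1, 47.9, 39.6, 36.2])

# Assumed convention: subtract the mean offset to place the survey 2
# velocities on the survey 1 zero point
rv_survey2_shifted = rv_survey2 - dv_samples.mean()

print("mean offset:", round(float(dv_samples.mean()), 2), "km/s")
print("shifted velocities:", np.round(rv_survey2_shifted, 2))
```

Leaving the data unshifted is useful for checking by eye how large the offset is relative to the measurement uncertainties.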

As introduced in the previous tutorial, we can also continue generating samples by initializing and running standard MCMC:

[11]:

with prior.model:

    mcmc_init = joker.setup_mcmc(data, joker_samples)

    trace = pm.sample(tune=500, draws=500, start=mcmc_init, cores=1, chains=2)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Sequential sampling (2 chains in 1 job)

NUTS: [dv0_1, e, __omega_angle1, __omega_angle2, __M0_angle1, __M0_angle2, P, K, v0]

Sampling 2 chains for 500 tune and 500 draw iterations (1_000 + 1_000 draws total) took 55 seconds.

We recommend running at least 4 chains for robust computation of convergence diagnostics

[12]:

az.summary(trace, var_names=prior.par_names)

/opt/hostedtoolcache/Python/3.11.8/x64/lib/python3.11/site-packages/arviz/stats/diagnostics.py:592: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

[12]:

| | mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat |
|---|---|---|---|---|---|---|---|---|---|
| P | 76.947 | 0.566 | 75.915 | 78.044 | 0.045 | 0.032 | 164.0 | 382.0 | 1.01 |
| e | 0.054 | 0.046 | 0.000 | 0.139 | 0.004 | 0.003 | 155.0 | 419.0 | 1.01 |
| omega | -0.081 | 1.742 | -3.138 | 2.477 | 0.469 | 0.339 | 24.0 | 283.0 | 1.11 |
| M0 | -0.576 | 2.214 | -3.139 | 2.955 | 0.162 | 0.150 | 288.0 | 566.0 | 1.01 |
| s | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 1000.0 | 1000.0 | NaN |
| K | 0.011 | 7.766 | -8.035 | 8.069 | 5.446 | 4.598 | 3.0 | 110.0 | 1.83 |
| v0 | 39.493 | 0.235 | 39.042 | 39.909 | 0.015 | 0.010 | 252.0 | 361.0 | 1.00 |
| dv0_1 | 4.284 | 0.483 | 3.420 | 5.192 | 0.037 | 0.026 | 175.0 | 556.0 | 1.00 |

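Here the true offset is 4.8 km/s, which lies within the 94% highest-density interval of dv0_1 in the summary above (3.42 to 5.19 km/s), so it looks like we recover this value!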
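The summary above also reports a large r_hat and a mean near zero for K, which is consistent with the two chains settling on opposite signs of K. The sketch below shows how that situation inflates a convergence statistic. It uses the classic Gelman-Rubin formula on made-up chains, whereas ArviZ computes a rank-normalized split version, so the numbers are not comparable to the table. basic_rhat is a hypothetical helper written for this illustration:

```python
import numpy as np


def basic_rhat(chains):
    """Classic Gelman-Rubin statistic for an array of shape (n_chains, n_draws)."""
    n = chains.shape[1]
    within = chains.var(axis=1, ddof=1).mean()      # mean within-chain variance
    between = n * chains.mean(axis=1).var(ddof=1)   # between-chain variance
    var_hat = (n - 1) / n * within + between / n
    return np.sqrt(var_hat / within)


rng = np.random.default_rng(7)

# Made-up chains: one explores K near +8 km/s, the other near -8 km/s
K_chains = np.stack(
    [rng.normal(8.0, 0.3, size=500), rng.normal(-8.0, 0.3, size=500)]
)

rhat_raw = basic_rhat(K_chains)
rhat_abs = basic_rhat(np.abs(K_chains))

print(f"r_hat with mixed signs: {rhat_raw:.2f}")
print(f"r_hat after taking |K|: {rhat_abs:.2f}")
```

A parameter with zero variance in every chain gives 0/0 in this formula, which is consistent with the NaN reported for s and the RuntimeWarning printed above.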

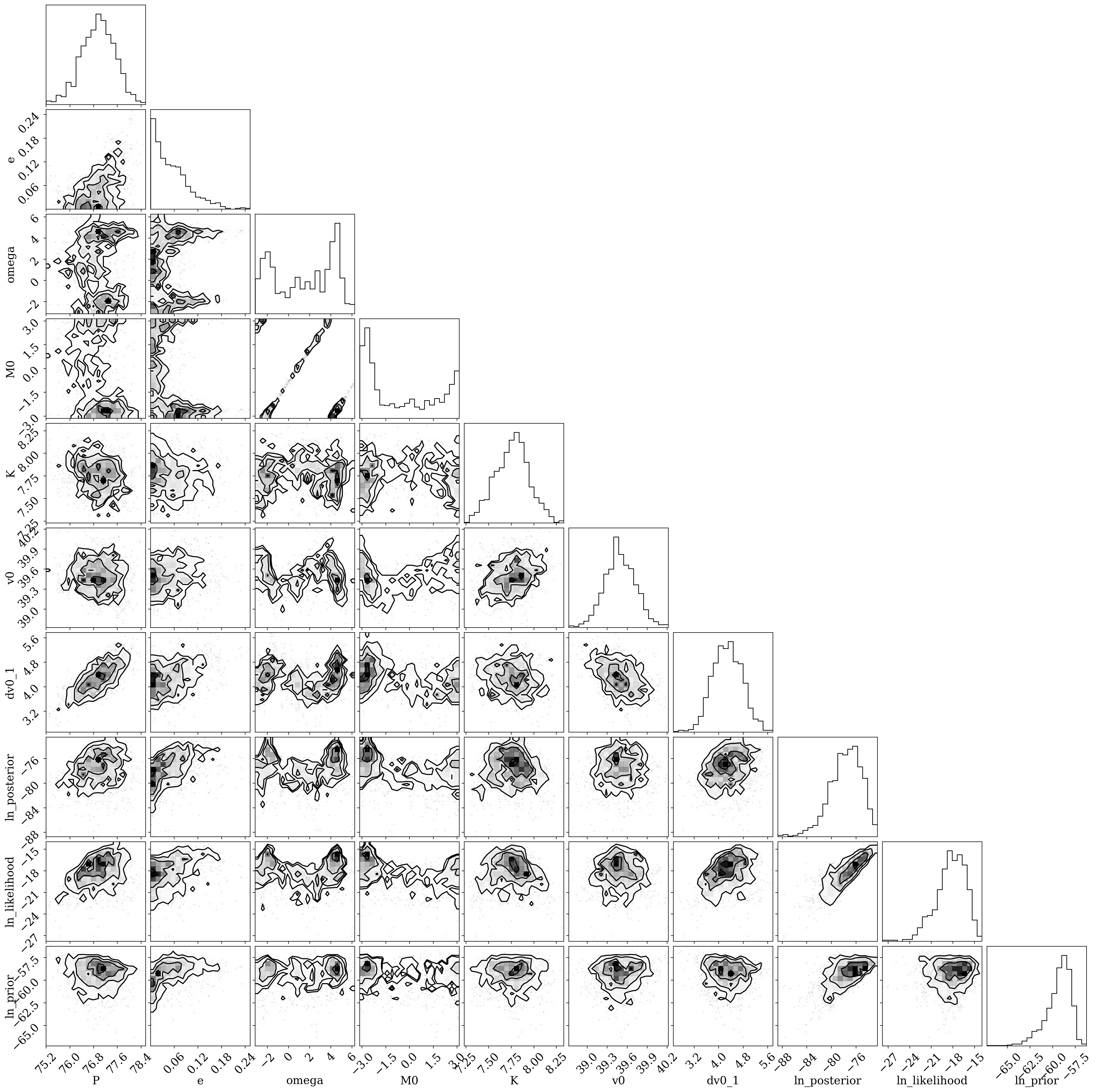

A full corner plot of the MCMC samples:

[13]:

mcmc_samples = tj.JokerSamples.from_inference_data(prior, trace, data)

mcmc_samples = mcmc_samples.wrap_K()

[14]:

df = mcmc_samples.tbl.to_pandas()

colnames = mcmc_samples.par_names

colnames.pop(colnames.index("s"))

_ = corner.corner(df[colnames])

WARNING:root:Pandas support in corner is deprecated; use ArviZ directly
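The wrap_K() call above relies on a symmetry of the Keplerian radial velocity model: changing the sign of K while adding π to omega leaves the velocity curve unchanged. The sketch below checks this numerically. It illustrates the idea and is not the library's implementation; rv_model and wrap_negative_K are hypothetical helpers, and the choice to keep omega in [-π, π) is an assumption:

```python
import numpy as np


def rv_model(f, K, e, omega, v0):
    """Keplerian radial velocity as a function of true anomaly f (radians)."""
    return v0 + K * (np.cos(omega + f) + e * np.cos(omega))


def wrap_negative_K(K, omega):
    """Map (K, omega) with K < 0 to the equivalent (|K|, omega + pi)."""
    K = np.asarray(K, dtype=float)
    omega = np.asarray(omega, dtype=float)
    new_omega = np.where(K < 0, omega + np.pi, omega)
    # Keep omega in [-pi, pi) (assumed range for this sketch)
    new_omega = (new_omega + np.pi) % (2 * np.pi) - np.pi
    return np.abs(K), new_omega


f = np.linspace(0, 2 * np.pi, 50)
K, omega = -8.0, 0.4
K_w, omega_w = wrap_negative_K(K, omega)

same_curve = np.allclose(
    rv_model(f, K, 0.05, omega, 39.5),
    rv_model(f, K_w, 0.05, omega_w, 39.5),
)
print("wrapped K:", float(K_w), "wrapped omega:", round(float(omega_w), 3))
print("identical RV curves:", same_curve)
```

Mapping all samples to positive K removes the mirror-image mode, so the corner plot shows a single mode in K and omega instead of two.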