[1]:

%run notebook_setup

Reproducing Swihart et al. 2019#

In this science demo tutorial, we will reproduce the results in Swihart et al. 2019, who used optical spectroscopic follow-up of a companion to a millisecond pulsar to constrain the mass of the pulsar. We will use The Joker to constrain the orbit of the system, assuming circular orbits (as done in the paper), and then continue sampling using MCMC (as implemented in pymc3) to compare our results.

First, a bunch of imports we will need later:

[2]:

import arviz as az

import astropy.units as u

import corner

import matplotlib.pyplot as plt

import numpy as np

import pymc as pm

import pymc_ext as pmx

import pytensor.tensor as pt

import thejoker as tj

import thejoker.units as xu

from astropy.io import ascii

from astropy.time import Time

%matplotlib inline

WARNING (pytensor.tensor.blas): Using NumPy C-API based implementation for BLAS functions.

[3]:

# set up a random number generator to ensure reproducibility

seed = 42

rnd = np.random.default_rng(seed=seed)

Load the data#

We will start by loading the radial velocity data from SOAR/Goodman, copy-pasted from Table 1 in Swihart et al. 2019):

[4]:

tbl = ascii.read(

"""2457945.5419639 -119.5 6.5

2457945.6301728 -16.9 7.2

2457956.5212405 -123.1 6.8

2457956.5352468 -107.9 6.4

2457956.5546942 -95.8 8.1

2457956.5687017 -65.6 8.2

2457956.5948590 -57.2 7.0

2457956.6088705 -38.0 6.0

2457966.4981876 -27.2 7.6

2457966.5122271 -18.4 20.1

2457966.5399208 38.7 7.2

2457966.5608956 49.6 8.7

2457966.5914866 115.1 10.2

2457967.5998999 -1.5 12.8

2457996.4786139 231.8 8.4

2457996.4931540 238.7 8.0

2457996.5105239 228.8 9.4

2458001.4795192 -144.0 11.4

2458001.4935388 -124.6 10.2

2458139.7564130 -136.8 7.7

2458139.7704090 -117.1 6.8

2458140.7400266 -194.5 9.8

2458140.7540222 -166.4 8.3

2458140.7770050 -150.8 7.4

2458140.7910007 -158.5 8.2

2458161.6721983 -121.2 7.9

2458161.6872977 -107.7 7.3

2458202.5822163 177.8 8.5

2458202.5962046 197.7 9.6

2458202.8350917 190.9 7.4

2458202.8490793 176.1 7.6

2458223.5928781 231.9 7.7

2458223.8317433 41.2 8.4

2458223.8456941 32.1 14.9

2458243.6779211 -87.6 8.6

2458243.6919415 -112.3 10.5

2458243.7115353 -125.5 9.5

2458243.7263475 -141.9 10.2

2458243.7459698 -130.3 10.9

2458247.5062024 131.9 11.5

2458247.5435496 160.5 14.2

2458278.5472619 197.1 15.9

2458278.5613912 183.7 15.7""",

names=["BJD", "rv", "rv_err"],

)

tbl["rv"].unit = u.km / u.s

tbl["rv_err"].unit = u.km / u.s

We set the scale to ‘tcb’ to indicate that these are barycentric julian dates:

[5]:

data = tj.RVData(

t=Time(tbl["BJD"], format="jd", scale="tcb"),

rv=u.Quantity(tbl["rv"]),

rv_err=u.Quantity(tbl["rv_err"]),

)

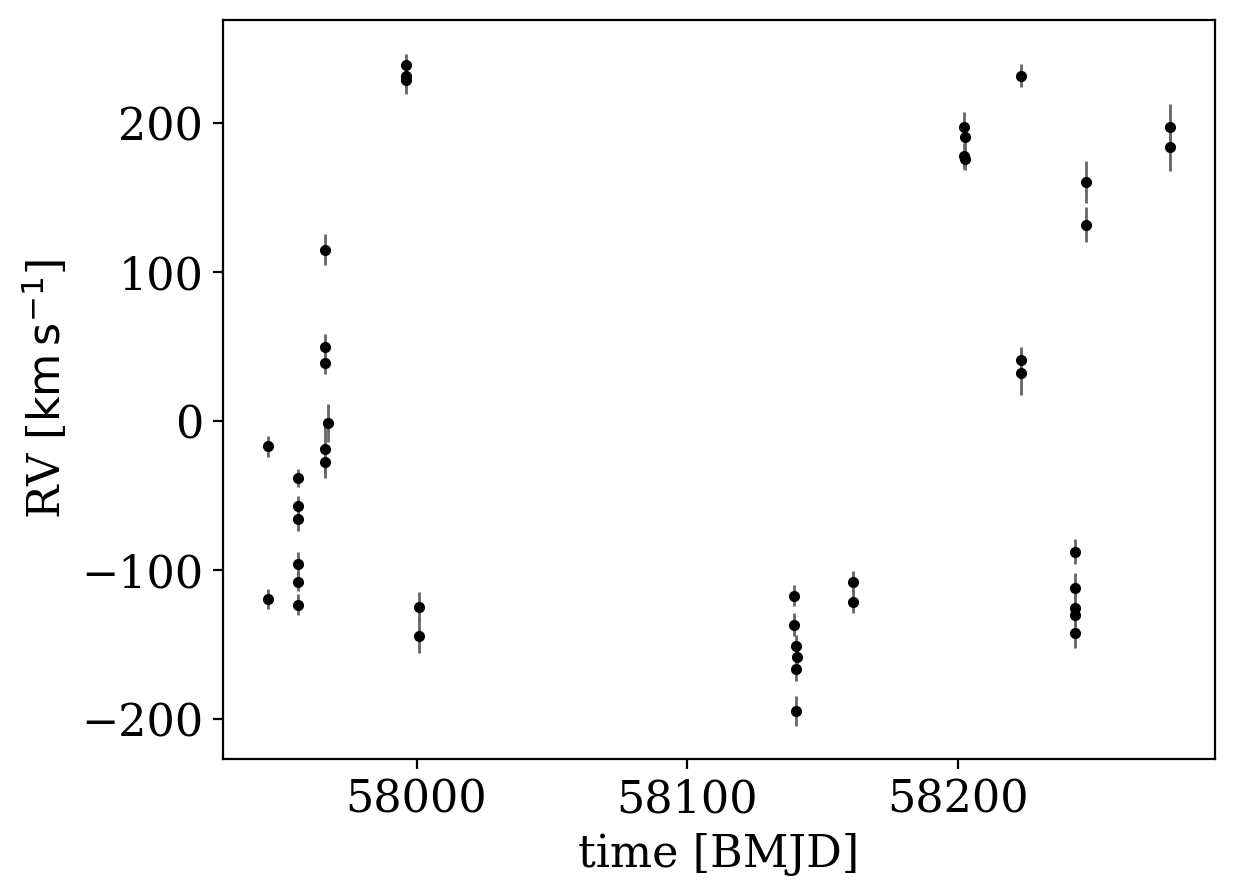

Let’s make a quick plot of the data:

[6]:

data.plot(markersize=3)

[6]:

<Axes: xlabel='time [BMJD]', ylabel='RV [$\\mathrm{km\\,s^{-1}}$]'>

We now need to specify the prior probability distribution over the Keplerian orbital parameters. We will fix the eccentricity to 0, assuming that the system has circularized. We set the scale of the prior on K to be 50 km/s at a period of 1 year: The default prior on K depends on period and eccentricity in a way that keeps the implied prior over companion mass constant. These parameter choices imply a standard deviation in companion mass of ~1.5 Msun:

[7]:

with pm.Model() as model:

# Allow extra error to account for under-estimated error bars

e = xu.with_unit(pm.ConstantData("e", 0), u.one)

omega = xu.with_unit(pm.ConstantData("omega", 0), u.rad)

prior = tj.JokerPrior.default(

P_min=0.1 * u.day,

P_max=100 * u.day, # Range of periods to consider

sigma_K0=50 * u.km / u.s,

P0=1 * u.year, # scale of the prior on semiamplitude, K

sigma_v=50 * u.km / u.s, # std dev of the prior on the systemic velocity, v0

pars={"e": e, "omega": omega},

)

Now that we have defined the prior, we can run The Joker to generate posterior orbit samples:

[8]:

# Run rejection sampling with The Joker:

joker = tj.TheJoker(prior, rng=rnd)

samples = joker.rejection_sample(

data, prior_samples=1_000_000, max_posterior_samples=100

)

samples

[8]:

<JokerSamples [P, e, omega, M0, s, K, v0] (1 samples)>

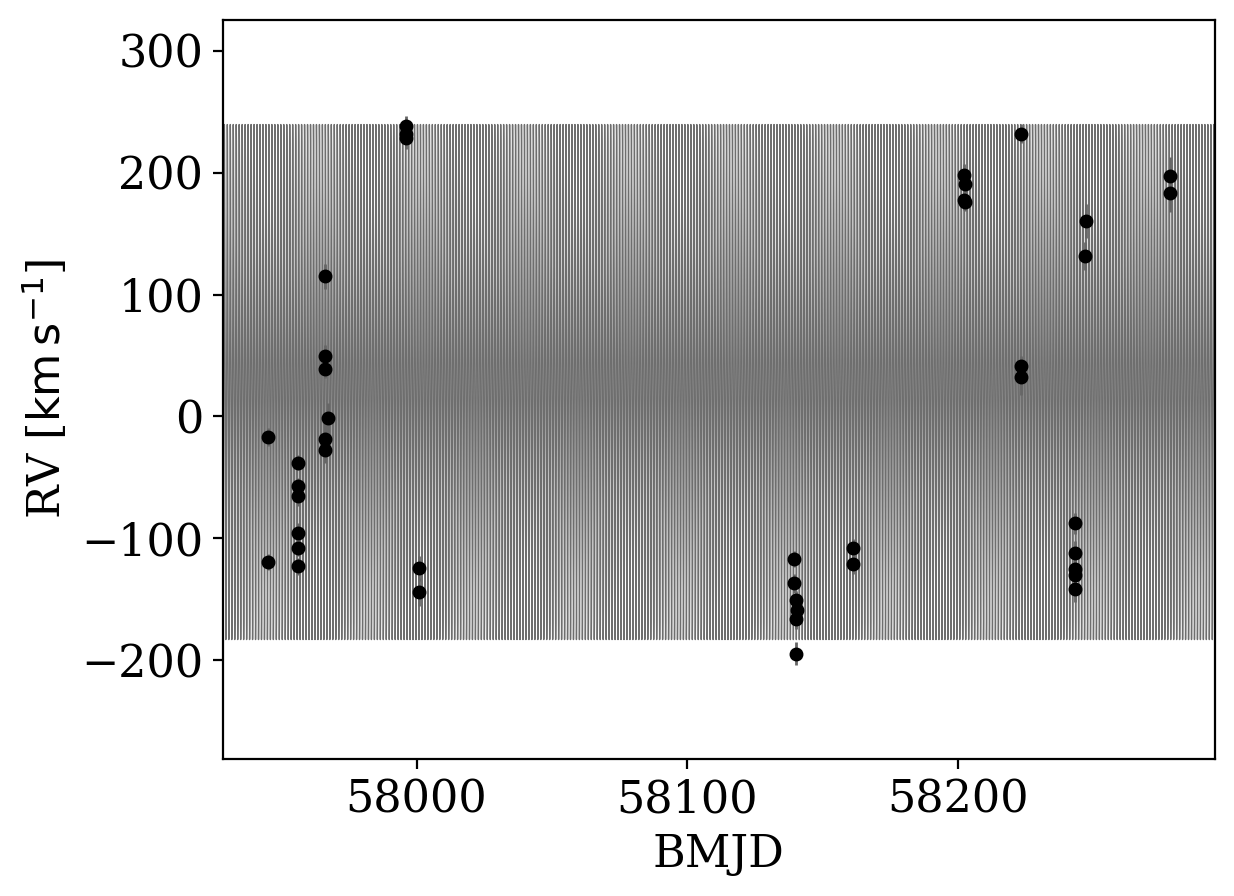

Only one sample was returned, implying that this system is very well constrained. Let’s plot the data and the orbit determined from that sample:

[9]:

_ = tj.plot_rv_curves(samples, data=data)

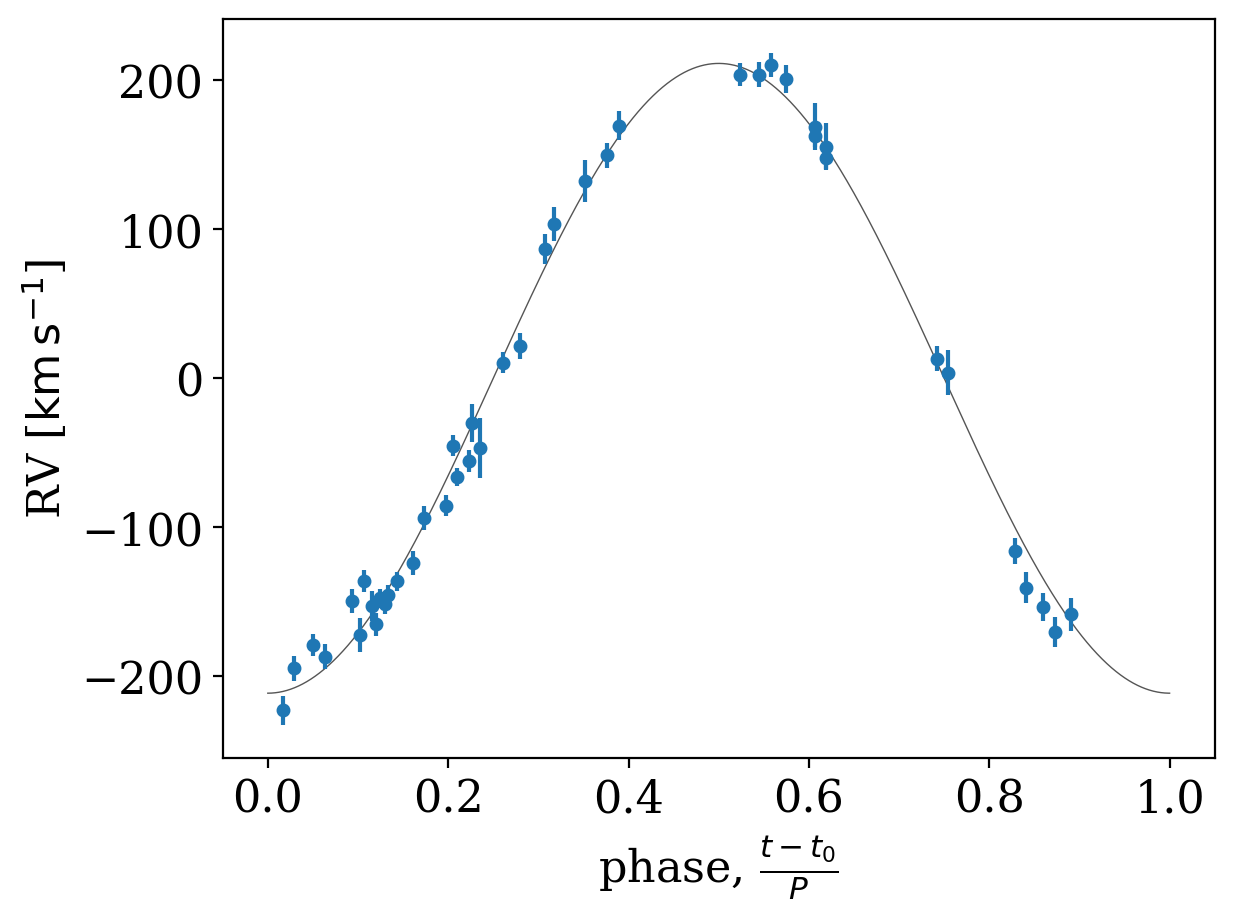

Well, that plot isn’t too useful! The period is very short and the baseline is long, so it is hard to see the orbit in the above solution. Let’s instead plot the phase-folded orbit and data:

[10]:

_ = tj.plot_phase_fold(samples[0], data=data)

Only one sample is returned from The Joker, implying that the data are very constraining. We therefore switch to using standard MCMC to continue generating posterior samples. With the new version of The Joker, this is done with pymc3, which uses Hamiltonian Monte Carlo (and the NUTS sampler) to efficiently generate samples. Setting up the MCMC sampling is similar to setting up The Joker, and we will use the sample returned from The Joker to initialize the MCMC:

[11]:

with pm.Model() as model:

# To sample with pymc, we have to set any constant variables as "Deterministic"

# objects. We can ignore eccentricity and the argument of pericenter by setting

# them both to 0:

e = xu.with_unit(pm.Deterministic("e", pt.constant(0)), u.one)

omega = xu.with_unit(pm.Deterministic("omega", pt.constant(0)), u.radian)

# We use the same prior parameters as before:

prior_mcmc = tj.JokerPrior.default(

P_min=0.1 * u.day,

P_max=10 * u.day,

sigma_K0=50 * u.km / u.s,

P0=1 * u.year,

sigma_v=50 * u.km / u.s,

pars={"e": e, "omega": omega},

)

# Now we use the sample returned from The Joker to set up our initialization for

# standard MCMC:

joker_mcmc = tj.TheJoker(prior_mcmc, rng=rnd)

mcmc_init = joker_mcmc.setup_mcmc(data, samples)

opt_init = pmx.optimize(mcmc_init)

trace = pm.sample(

tune=1000,

draws=1000,

start=opt_init,

random_seed=seed,

cores=1,

chains=2,

init="adapt_full",

target_accept=0.9,

)

Auto-assigning NUTS sampler...

Initializing NUTS using adapt_full...

/opt/hostedtoolcache/Python/3.11.8/x64/lib/python3.11/site-packages/pymc/step_methods/hmc/quadpotential.py:629: UserWarning: QuadPotentialFullAdapt is an experimental feature

warnings.warn("QuadPotentialFullAdapt is an experimental feature")

Sequential sampling (2 chains in 1 job)

NUTS: [__M0_angle1, __M0_angle2, P, K, v0]

Sampling 2 chains for 1_000 tune and 1_000 draw iterations (2_000 + 2_000 draws total) took 240 seconds.

We recommend running at least 4 chains for robust computation of convergence diagnostics

We can now use arviz to look at some statistics of the MC chains to assess convergence:

[12]:

par_names = prior_mcmc.par_names.copy()

par_names.pop(par_names.index("e"))

par_names.pop(par_names.index("omega"))

# par_names.pop(par_names.index("s"))

az.summary(trace, var_names=par_names)

/opt/hostedtoolcache/Python/3.11.8/x64/lib/python3.11/site-packages/arviz/stats/diagnostics.py:592: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

[12]:

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| P | 1.097 | 0.000 | 1.097 | 1.097 | 0.000 | 0.000 | 1822.0 | 1341.0 | 1.0 |

| M0 | -0.718 | 0.017 | -0.751 | -0.688 | 0.000 | 0.000 | 1467.0 | 1117.0 | 1.0 |

| s | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 2000.0 | 2000.0 | NaN |

| K | -209.494 | 2.080 | -213.856 | -206.071 | 0.078 | 0.055 | 717.0 | 934.0 | 1.0 |

| v0 | 32.044 | 1.800 | 28.382 | 35.243 | 0.048 | 0.034 | 1372.0 | 1050.0 | 1.0 |

We can then transform the MCMC samples back into a JokerSamples instance so we can manipulate and visualize the samples:

[13]:

mcmc_samples = tj.JokerSamples.from_inference_data(prior, trace, data=data)

mcmc_samples = mcmc_samples.wrap_K()

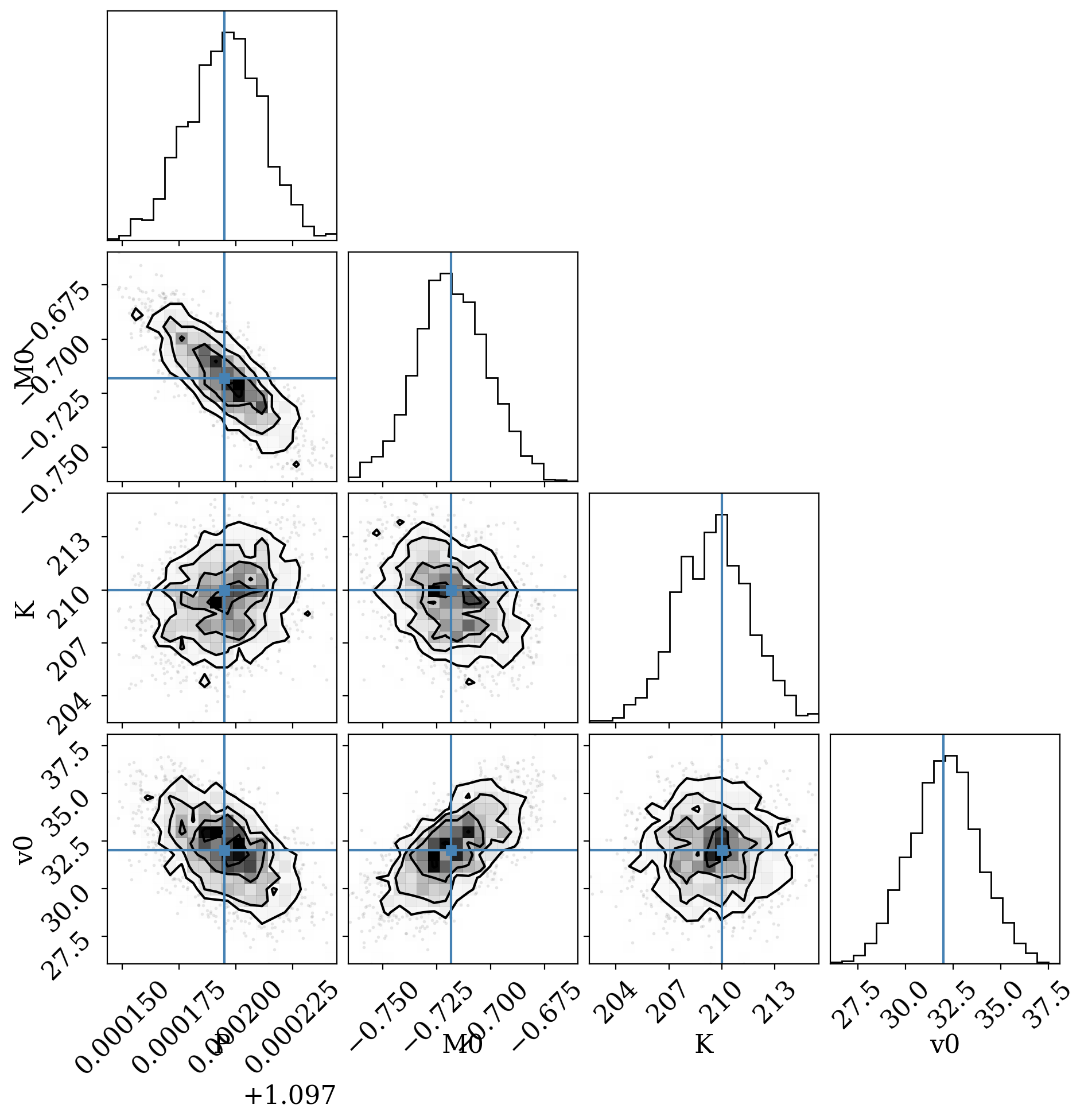

For example, we can make a corner plot of the orbital parameters (I remove any of the parameters we ignored):

[14]:

df = mcmc_samples.tbl.to_pandas()

df = df.drop(columns=["e", "s", "omega", "ln_posterior", "ln_prior", "ln_likelihood"])

true_P = 1.097195

true_T0 = 2457780.8373

true_M0 = (2 * np.pi * (true_T0 - data.t_ref.tcb.jd) / true_P) % (2 * np.pi) - (

2 * np.pi

)

_ = corner.corner(df, truths=[true_P, true_M0, 210, 32.0])

WARNING:root:Pandas support in corner is deprecated; use ArviZ directly

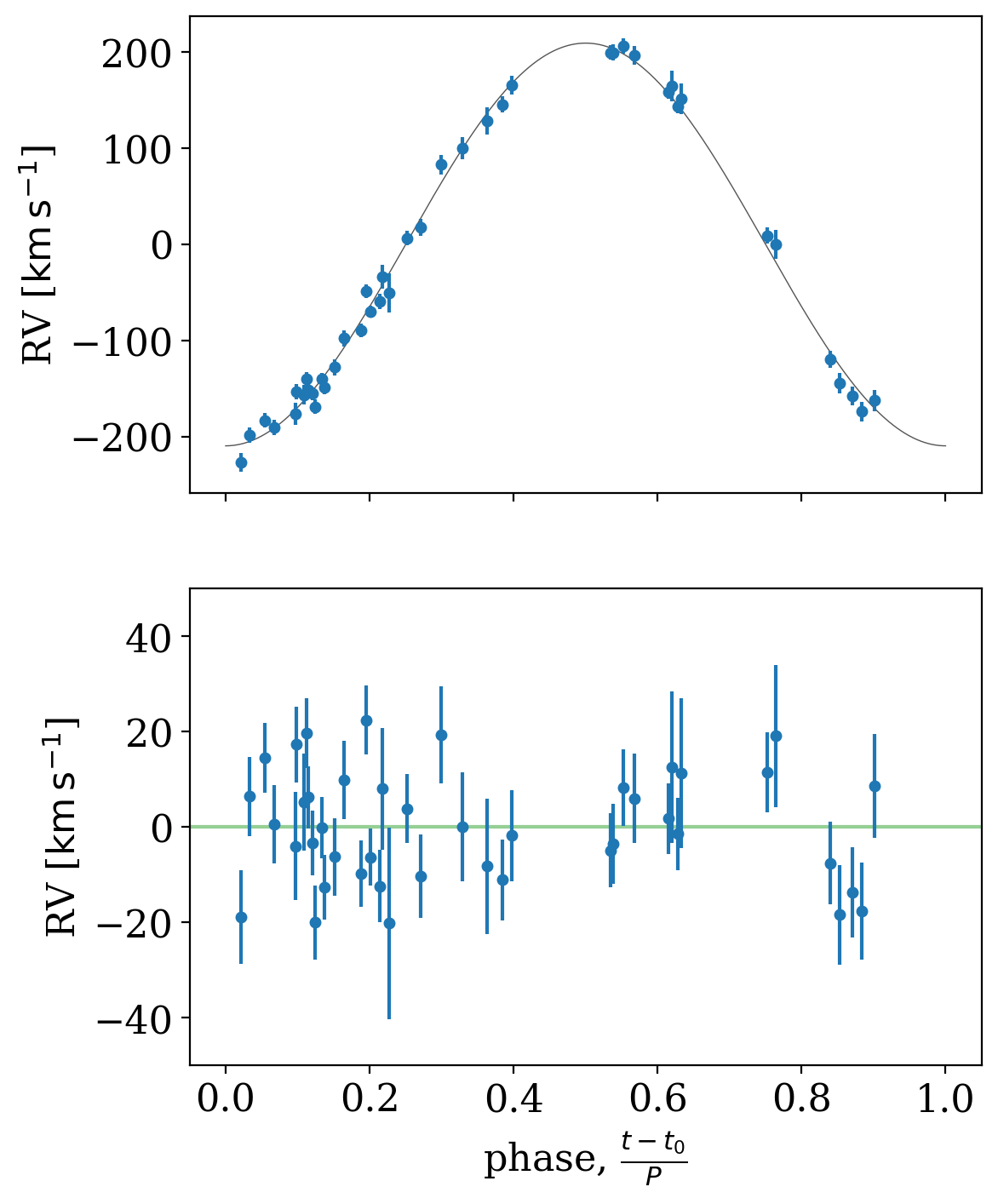

We can also use the median MCMC sample to fold the data and plot residuals relative to our inferred RV model:

[15]:

fig, axes = plt.subplots(2, 1, figsize=(6, 8), sharex=True)

_ = tj.plot_phase_fold(mcmc_samples.mean(), data, ax=axes[0], add_labels=False)

_ = tj.plot_phase_fold(mcmc_samples.mean(), data, ax=axes[1], residual=True)

for ax in axes:

ax.set_ylabel(f"RV [{data.rv.unit:latex_inline}]")

axes[1].axhline(0, zorder=-10, color="tab:green", alpha=0.5)

axes[1].set_ylim(-50, 50)

[15]:

(-50.0, 50.0)

In the end, it looks like we reproduce the results in Swihart et al. using their data and the new version of The Joker. A success for reproducible science!